Performance testing saves companies millions of dollars. According to a report by Dun & Bradstreet, 59% of Fortune 500 companies experience a minimum of 1.6 hours of downtime each week. Let’s do the math real quick. On average, a Fortune 500 company employs 52,810 people. If each employee earned only $10 an hour, 1.6 hours of downtime would cost a company $844,960 a week in lost productivity, or $43,937,920 a year.

That’s a lot of money that just...disappears.
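Here is the same back-of-the-envelope estimate as a short Python script, so it is easy to check or adapt. It uses the headcount, hourly wage, and full 1.6 hours of weekly downtime quoted above. The 52-week year, with the same downtime every week, is an assumption.

```python
# Back-of-the-envelope cost of weekly downtime, using the figures quoted above.
EMPLOYEES = 52_810            # average Fortune 500 headcount (from the text)
HOURLY_WAGE = 10.00           # illustrative wage in dollars (from the text)
DOWNTIME_HOURS_PER_WEEK = 1.6
WEEKS_PER_YEAR = 52           # assumption: identical downtime every week


def downtime_cost(employees: int, wage: float, hours: float) -> float:
    """Lost productivity in dollars for `hours` of downtime across all employees."""
    return employees * wage * hours


weekly = downtime_cost(EMPLOYEES, HOURLY_WAGE, DOWNTIME_HOURS_PER_WEEK)
yearly = weekly * WEEKS_PER_YEAR
print(f"Weekly: ${weekly:,.0f}")  # Weekly: $844,960
print(f"Yearly: ${yearly:,.0f}")  # Yearly: $43,937,920
```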

It is in everyone’s best interest that software performance testing is done thoroughly. Let's explore why performance testing matters, its different types, common problems, and valuable tools.

What is Performance Testing?

Performance testing checks that software stays fast, stable, and responsive under its expected workload. Developers want to avoid creating software that’s responsive and fast when only one user is connected but becomes sluggish when many users are connected at once.

QA testing isn’t only concerned with bugs. The software’s speed, responsiveness, and resource usage matter too. Performance testing focuses on identifying and addressing performance bottlenecks, which are found by simulating user traffic. Ideally, QA testers run performance tests under real-world conditions. This is one of the software’s first voyages out of the haven of ideal circumstances and into the uncertain world of end-user experience.
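Automated tools simulate traffic by running many virtual users at once and recording how long each request takes. The sketch below shows that core idea in plain Python with a thread pool. `handle_request` is a hypothetical placeholder that only sleeps for a random 5-20 ms. In practice you would replace it with a real call to the system under test. Dedicated tools layer ramp-up schedules, distributed load generation, and reporting on top of this.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for one user action (e.g. loading a page).

    Replace this with a real call to the system under test.
    """
    time.sleep(random.uniform(0.005, 0.02))  # simulated 5-20 ms of work


def timed_request() -> float:
    """Run one request and return its latency in milliseconds."""
    start = time.perf_counter()
    handle_request()
    return (time.perf_counter() - start) * 1000


def simulate_users(num_users: int, requests_per_user: int) -> list[float]:
    """Send requests with up to `num_users` running concurrently; return all latencies."""
    total = num_users * requests_per_user
    with ThreadPoolExecutor(max_workers=num_users) as pool:
        futures = [pool.submit(timed_request) for _ in range(total)]
        return [f.result() for f in futures]


latencies = simulate_users(num_users=20, requests_per_user=5)
print(f"requests: {len(latencies)}")
print(f"mean latency: {statistics.mean(latencies):.1f} ms")
print(f"max latency:  {max(latencies):.1f} ms")
```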

Why Should I Test Performance?

Performance testing provides stakeholders with concrete information about the speed, stability, and scalability of the software. Without it, the software risks suffering speed and reliability issues on release.

We already mentioned the productivity cost of a system crash. In many cases, that alone is reason enough. But not all software is used internally; much of it is shipped and sold to customers. In that scenario, performance testing becomes even more critical, because the last thing you want is a horde of customers upset with the quality of your product.

During development, performance testing provides a clearer picture of what needs to be improved in terms of speed, stability, and resource usage. Without it, the software could be released with serious problems, such as slow runtime with multiple users, crashes due to user overload, and an inconsistent user experience across different operating systems and browsers.

Testing for bugs won’t give a clear picture of how the software will perform under load. That’s why performance testing should be carried out independently, with the sole purpose of finding bottlenecks. Good performance testers know that speed isn’t the only marker of performance. For example, an application that loads fast but uses 100% of the user's CPU isn’t performant. An application like that would cause the end-user many headaches, such as overheating, slower performance in other applications, and occasional crashes.
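One way to look beyond raw speed is to record both wall-clock time and CPU time for the same operation. The sketch below uses Python's standard `time.perf_counter()` and `time.process_time()`. `busy_work` is a hypothetical CPU-bound task chosen for illustration. For single-threaded code, a CPU-to-wall ratio close to 1.0 means the operation kept a core busy the whole time. A much lower ratio means it spent most of that time waiting, for example on I/O.

```python
import time


def busy_work(n: int) -> int:
    """Hypothetical CPU-heavy task used only for illustration."""
    return sum(i * i for i in range(n))


def profile(func, *args):
    """Return (result, wall-clock seconds, CPU seconds) for one call of func."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time of this process (user + system)
    result = func(*args)
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    return result, wall, cpu


result, wall, cpu = profile(busy_work, 2_000_000)
# For single-threaded code, a ratio near 1.0 means one core was busy the entire call.
print(f"wall: {wall:.3f}s  cpu: {cpu:.3f}s  cpu/wall: {cpu / wall:.2f}")
```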

How to Do Performance Testing

How you do performance testing varies depending on the software, and there are several different approaches a QA tester can take. It’s a form of non-functional testing, meaning it checks how well the software works (its speed, stability, and resource usage) rather than whether each feature behaves correctly.

Performance testers want to ensure the internal components are as fine-tuned as possible. They’re the pit crew in an F1 race.

When and how performance testing is carried out depends on the methodology the organization is using. If they follow the traditional waterfall approach to software development, the performance testers likely won’t get their hands on the product until development has finished. If the organization uses the agile methodology, agile performance testing will likely happen throughout the development process.
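In an agile setup, one lightweight way to catch performance regressions early is a small timing check that runs with the automated test suite on every change. Below is a sketch of such a check as a pytest-style test using Python's standard `timeit` module. `build_report` and the 50 ms budget are hypothetical. Timing-based tests can be flaky on shared CI machines, so budgets like this are usually set generously and treated as a tripwire rather than a precise benchmark.

```python
import timeit


def build_report(n: int = 10_000) -> str:
    """Hypothetical function under test."""
    return ",".join(str(i) for i in range(n))


BUDGET_SECONDS = 0.05  # assumed per-call budget agreed on by the team


def test_build_report_within_budget() -> None:
    # Take the best of several repeats to reduce noise from other processes,
    # then divide by `number` to get the time for a single call.
    best = min(timeit.repeat(build_report, number=10, repeat=5)) / 10
    assert best < BUDGET_SECONDS, f"build_report took {best:.4f}s per call"
```

A test runner such as pytest would pick up `test_build_report_within_budget` automatically and fail the build if the function slows down past its budget.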

Types of Performance Testing

Some types of performance testing can be done manually, while others call for automated testing. Automation is strongly preferred for most of them, because these tests need many virtual users interacting with the software as if they were real end-users, and the tools for doing this keep getting more effective and reliable. Replicating that load by hand would take far more testers than most teams employ.

Just because much of performance testing is handled by machines doesn’t mean there aren’t important distinctions between types of tests. A QA tester should understand the different types of performance testing so they know the best tool for the job.

Let's break down the different types of performance testing and what each type of test hopes to accomplish.

Capacity Testing

Tests how many users the system can handle before performance dips below acceptable levels. Knowing the software’s capacity helps developers anticipate scalability issues and plan for future user-base growth.
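A capacity test usually ramps the load up step by step and records the highest level at which response times stay acceptable. The Python sketch below shows that ramp logic. It does not talk to a real system. Instead it uses a hypothetical toy model (`modeled_response_ms`) in which response time rises sharply as load approaches saturation. The 50 ms service time, 1,000-user saturation point, and 200 ms limit are all assumptions.

```python
import math

SERVICE_TIME_MS = 50       # assumed response time with a single user
SATURATION_USERS = 1_000   # assumed load at which the system is fully saturated
SLA_MS = 200               # assumed "acceptable" response-time limit


def modeled_response_ms(users: int) -> float:
    """Toy queueing-style model: response time climbs as load nears saturation.

    A real capacity test would measure this against the running system.
    """
    utilization = users / SATURATION_USERS
    if utilization >= 1:
        return math.inf
    return SERVICE_TIME_MS / (1 - utilization)


def find_capacity(step: int = 50, max_users: int = 5_000) -> int:
    """Ramp up users in fixed steps; return the last level that met the SLA."""
    capacity = 0
    for users in range(step, max_users + 1, step):
        if modeled_response_ms(users) > SLA_MS:
            break
        capacity = users
    return capacity


print(find_capacity())  # 750 with the assumed numbers above
```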

Load Testing

Confirms that the system can handle the expected number of users while still meeting its performance targets, such as response-time limits. Load testing helps catch the performance issues users would otherwise run into day to day.
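Load tests are usually judged against explicit targets rather than a general sense of "fast enough." The Python sketch below shows one common way to turn raw results into a pass/fail verdict: a 95th-percentile response-time limit plus a maximum error rate. The 500 ms and 1% thresholds are assumptions for illustration. The percentile uses the simple nearest-rank method, and load testing tools may calculate percentiles differently.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in (0, 100]) of a list of latencies."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def load_test_passes(latencies_ms: list[float], error_count: int,
                     p95_limit_ms: float = 500.0, max_error_rate: float = 0.01) -> bool:
    """Pass if p95 latency of successful requests and the error rate are both within limits."""
    total = len(latencies_ms) + error_count
    error_rate = error_count / total
    return percentile(latencies_ms, 95) <= p95_limit_ms and error_rate <= max_error_rate


# Example: 100 successful requests taking 100-595 ms, no errors.
results = [float(ms) for ms in range(100, 600, 5)]
print(percentile(results, 95))                           # 570.0
print(load_test_passes(results, error_count=0))          # False (p95 is above 500 ms)
print(load_test_passes(results, 0, p95_limit_ms=600.0))  # True
```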

Volume Testing

Checks that the software can handle and process a large amount of data simultaneously without breaking, slowing down, or losing any information.
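Below is a minimal volume-test sketch in Python. It uses the standard library's `sqlite3` module with an in-memory database as a stand-in for the real data store. It writes a large batch of records in one transaction, then checks that the row count and a simple checksum (the sum of one column) come back unchanged. The `orders` table and its columns are hypothetical. A real volume test would target a production-like database and also time each step.

```python
import sqlite3


def volume_test(num_rows: int) -> bool:
    """Write `num_rows` records in one batch, read them back, and confirm none were lost."""
    conn = sqlite3.connect(":memory:")  # in-memory DB keeps the sketch self-contained
    try:
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)"
        )
        rows = ((i, i % 10_000) for i in range(num_rows))
        with conn:  # commits the whole batch as a single transaction
            conn.executemany("INSERT INTO orders (id, amount_cents) VALUES (?, ?)", rows)
        count, total = conn.execute(
            "SELECT COUNT(*), COALESCE(SUM(amount_cents), 0) FROM orders"
        ).fetchone()
        expected_total = sum(i % 10_000 for i in range(num_rows))
        return count == num_rows and total == expected_total
    finally:
        conn.close()


print(volume_test(200_000))  # True if every record survived the round trip
```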

Stress Testing

Intentionally tries to break the software by simulating a number of users that significantly exceeds expectations. The surge of traffic to Apple’s website on the launch day of a new iPhone is a good real-world example of the kind of load a stress test simulates.
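The essence of a stress test is to keep raising the load well past the expected level and watch how the system degrades. The Python sketch below does this against a hypothetical toy server model rather than a real system. The model serves a fixed number of requests per tick and has a bounded queue, and any overflow is dropped. The capacity, queue size, and expected load are all assumptions.

```python
def simulate(arrivals_per_tick: int, ticks: int,
             capacity_per_tick: int = 100, queue_limit: int = 500) -> float:
    """Toy server model: return the fraction of requests dropped.

    Each tick, new requests join a bounded queue (overflow is dropped),
    then the server completes up to `capacity_per_tick` of the queued requests.
    """
    queue = dropped = total = 0
    for _ in range(ticks):
        total += arrivals_per_tick
        accepted = min(arrivals_per_tick, queue_limit - queue)
        dropped += arrivals_per_tick - accepted
        queue += accepted
        queue -= min(queue, capacity_per_tick)
    return dropped / total if total else 0.0


EXPECTED = 80  # assumed normal load, in requests per tick
for multiplier in (1, 2, 5, 10):
    rate = simulate(EXPECTED * multiplier, ticks=100)
    print(f"{multiplier:>2}x load: {rate:.1%} dropped")
```

With these numbers, the model drops nothing at normal load and a growing share of requests beyond it. Against a real system you would also watch for crashes and error responses, and check whether the system recovers once the load falls back.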

Soak Testing

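Simulates high traffic for an extended period (for example, several hours) to check whether the software can sustain it. Soak tests catch problems that only surface over time, such as memory leaks or gradually degrading response times.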
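Because soak tests hunt for problems that build up slowly, a common check is to sample memory usage at intervals during a long run and look for steady growth. The sketch below does this in Python with the standard `tracemalloc` module, compressing "hours" into a few thousand iterations. `leaky_task`, `clean_task`, and the 1 MB tolerance are hypothetical choices for illustration. Against a real service you would more likely sample the process's memory from outside over the full test duration.

```python
import tracemalloc

_cache = []  # hypothetical module-level cache that is never cleared


def leaky_task() -> None:
    """Hypothetical task that leaks: it keeps a 10 KB buffer on every call."""
    _cache.append(bytearray(10_000))


def clean_task() -> None:
    """Hypothetical task that allocates the same buffer but lets it be freed."""
    buffer = bytearray(10_000)
    del buffer


def soak(task, iterations: int = 2_000, sample_every: int = 500) -> list[int]:
    """Run `task` repeatedly and sample traced memory (in bytes) at intervals."""
    tracemalloc.start()
    samples = []
    try:
        for i in range(1, iterations + 1):
            task()
            if i % sample_every == 0:
                current, _peak = tracemalloc.get_traced_memory()
                samples.append(current)
    finally:
        tracemalloc.stop()
    return samples


def looks_like_leak(samples: list[int], tolerance_bytes: int = 1_000_000) -> bool:
    """Flag steady growth: the last sample exceeds the first by more than the tolerance."""
    return samples[-1] - samples[0] > tolerance_bytes


print(looks_like_leak(soak(leaky_task)))  # True: memory keeps climbing
print(looks_like_leak(soak(clean_task)))  # False
```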

4 Common Performance Problems

Performance testers typically encounter at least one of these four problems during testing.

- Long Load Time – Nobody enjoys staring at their screen for 30-60 seconds waiting for an application to load. It’s boring, especially if it’s an app you open multiple times a day. Apart from some seriously beefy programs, most applications and web pages should open within a few seconds. Load tests typically catch software that has trouble opening within an acceptable time frame.

- Poor Response Time – Closely related to long load times. It’s equally frustrating when, after finally opening the app, navigating between menus or entering data also takes 30-60 seconds. Think about the apps you use every day: how many of them make you wait around for a page to load? Likely not many. Slow responses make people lose interest.

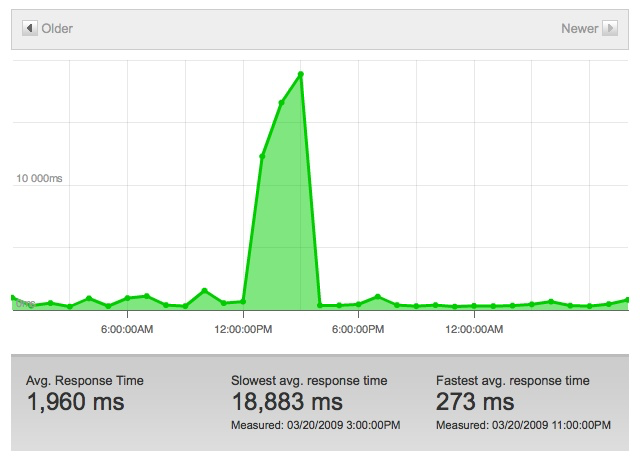

- Poor Scalability – This is casually called the ‘slashdot effect’ or the ‘internet hug of death.’ Have you ever heard about an interesting website someone shared with you on Facebook, and when you clicked on the link, the page didn’t load? That’s because you and a million others wanted to check out the cool little site, and it didn’t have the infrastructure to handle a sudden influx of users. A graph of their site traffic would look something like this:

- Bottlenecking – A bottleneck occurs when one resource hits its limit and holds back the performance of the whole system. If your software drives users' CPUs to 100%, there’s no processing power left for other tasks. This is bad and often leads to overheating and a significant drop in performance. Bottlenecks can occur in several places. Some of the most common are:

  - CPU

  - Memory

  - GPU

  - Disk Usage

Best Performance Testing Tools

Which performance testing tool is right for you depends on your project, your objectives, and your budget. Some tools, such as JMeter, are very good at running load and stress tests. Smaller companies may opt for one of the many high-quality, free, open-source performance testing tools to reduce costs.

Open Source Performance Testing Tools

One top-rated open-source testing tool is JMeter. It has been a go-to option for small companies looking to run effective tests. JMeter measures server performance under load, letting you run load and stress tests to check whether your software can handle both the normal and the maximum number of expected users.

When the performance tests are finished, JMeter lets you view the results in several easy-to-understand ways. One option is to display your results as a graph. Here’s what JMeter graphs look like:

When reading the graph, the most crucial parameter is throughput (the green line), which shows how many requests the server handled per unit of time during the test. The higher the number, the better: it tells you how many requests your software can handle per minute. In this example, it’s 8,003 requests per minute.
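JMeter's documentation describes throughput as requests per unit of time, measured from the start of the first sample to the end of the last one. The Python sketch below applies that definition to a results file saved in CSV format. It assumes the header row includes JMeter's `timeStamp` column (sample start, in the default epoch-millisecond format) and its `elapsed` column (duration in milliseconds). Check your own results file, since the saved columns are configurable. The sample rows are made up purely to exercise the function.

```python
import csv
import io


def throughput_per_minute(results_csv) -> float:
    """Requests per minute, from the start of the first sample to the end of the last.

    Assumes a CSV with a header row that includes `timeStamp` (epoch ms)
    and `elapsed` (ms) columns, as in JMeter's CSV results output.
    """
    first_start = None
    last_end = None
    count = 0
    for row in csv.DictReader(results_csv):
        start = int(row["timeStamp"])
        end = start + int(row["elapsed"])
        first_start = start if first_start is None else min(first_start, start)
        last_end = end if last_end is None else max(last_end, end)
        count += 1
    if count == 0 or last_end == first_start:
        raise ValueError("need at least one sample with a non-zero time span")
    return count * 60_000 / (last_end - first_start)


# Illustrative results file: 4 requests spanning exactly 4 seconds.
sample = io.StringIO(
    "timeStamp,elapsed,label,success\n"
    "1700000000000,200,home,true\n"
    "1700000001000,300,home,true\n"
    "1700000002000,250,login,true\n"
    "1700000003000,1000,login,true\n"
)
print(throughput_per_minute(sample))  # 60.0
```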

Other top tools:

Open-source performance testing has some drawbacks. One is that all the simulated users run on company servers, so the tests reflect ideal conditions rather than real-world ones. For small companies that don’t expect a substantial amount of load, this may suit their needs fine. As a company scales up, however, it may start looking at purchasing a premium performance testing tool.

Here’s a brief list of some premium performance testing tools:

Performance testing is generally similar across operating systems, and many performance testing tools available on Mac also run on Windows.

Final Thoughts

Performance testing is an indispensable component for ensuring the reliability and efficiency of software, especially for SaaS companies experiencing rapid growth. By utilizing top tools, QA leads gain invaluable insights into their systems' scalability, endurance, and ability to handle high traffic. Subscribe to our newsletter for more insights from quality assurance pros!