Editor’s Note: Welcome to the Leadership In Test series from software testing guru & consultant Paul Gerrard. The series is designed to help testers with a few years of experience—especially those on agile teams—excel in their test lead and management roles.

In the previous article we looked at tools you’ll be using regularly as a test manager. In this article, we’ll be focusing more specifically on test execution tools and what you get, and don’t get, with them.

Sign up to The QA Lead newsletter to get notified when new parts of the series go live. These posts are extracts from Paul’s Leadership In Test course which we highly recommend to get a deeper dive on this and other topics. If you do, use our exclusive coupon code QALEADOFFER to score $60 off the full course price!

Test (Execution) Automation

The topic of test automation (usually through a graphical user interface) is high up on most testers’ and test managers’ agendas. Superficially these tools appear to hold much promise, but many organisations wanting to automate some or all of their functional testing hit problems.

We won’t go into too much technical detail in this article, but we’ll touch on some of the issues relevant to test and project managers who need to create a business case for automation. We’ll look at:

- What You Do and Don’t Get From Test Execution Tools

- Graphical User Interface (GUI) Test Automation

- API and Service Test Automation

- Regression Testing

- Test Automation Frameworks

It’s important to set realistic expectations for what automation can and cannot do, so the next few sections might appear somewhat pessimistic.

What You Do and Don’t Get From Test Execution Tools

Whether you are testing Windows desktop applications, websites or mobile devices, the principles, benefits and pitfalls of test automation are similar. What you get from tools is:

- A tireless, supposedly error-free robot that will run whatever scripted tests you like, on-demand, as frequently as you wish.

- A precise comparison between the output and/or outcome of tests, with prepared expected results, at whatever level of granularity you are willing to program into the tool.

What you don’t get is:

- Flexibility and smooth responses to anomalies, failures or questionable behaviour.

- The eyes and thinking of a human tester, capable of making decisions to explore, question, experiment and challenge the behaviour of the system under test.

- Tests for free. You still have to design tests and prepare test data and expected results, for example.

- While test execution tools offer a range of functionalities, they often lack advanced data storage capabilities. For a more comprehensive solution, you might want to explore the best database management software available.

Let’s explore the significance of these benefits and problems.

If you are a reasonably competent programmer, it is straightforward to take procedural tests normally run by humans and implement them in your tool’s scripting language, so that the tool can execute them accurately.

As long as the environment and data used by your test application are consistent, you can expect your tests to run reliably again and again. This is the obvious promise of automated test execution.

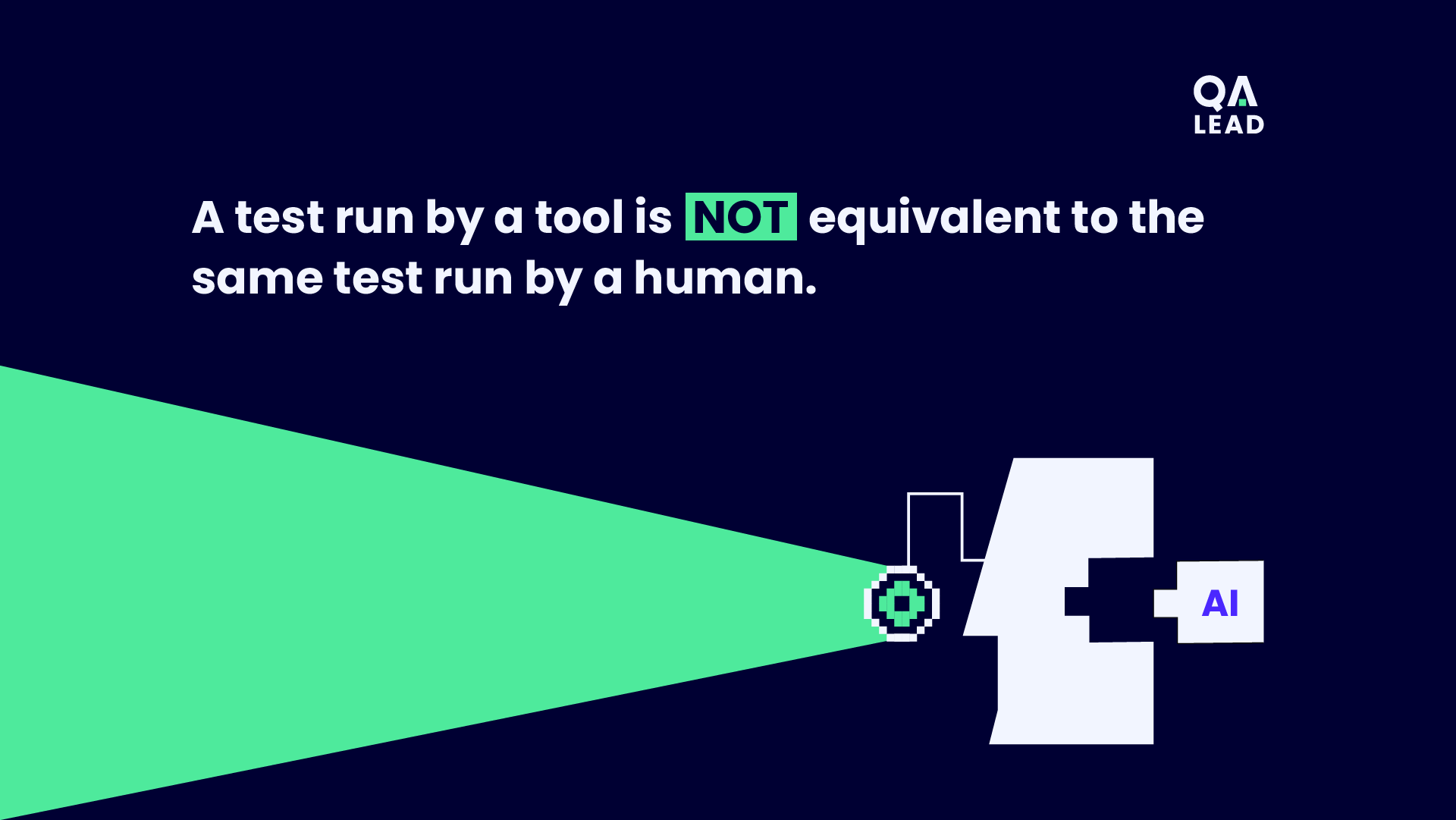

But automated tests do not accurately simulate what (good) testers do when testing.

A test run by a tool is NOT equivalent to the same test run by a human.

A test, if scripted, might tell the tester what to do. But testers can look beyond the simple comparison between an expected result and the displayed result on a screen.

Testers must navigate to the location of the test and be vigilant for anomalous behaviour – the response to commands; the speed of response; the appearance of the screen; the behaviour of objects affected by the changing state of the application.

The human tester, when observing an anomaly, might pause, retrace their steps, or look deeper into the application or data before concluding the application works correctly (or not) and whether to continue with the scripted test, to adjust the data and script of the test, or to carry on as intended.

Humans are flexible whereas tools will only do exactly what you program them to do. In a screen-based transaction, the tool enters data, clicks a button and checks for a message or displayed result – and that is all. With human testers, you get so much more that we take for granted.

In principle, it is possible to program a tool to perform all the checks that a human performs instinctively – but you will have to write a lot of code and spend time debugging your tests. Even then, you don’t get the human’s ability to decide what to do next – to pause, carry on, change the test midstream, or explore an anomaly more deeply.

Nowhere is the inflexibility of tools more evident than when reacting to the loss of synchronisation of the script with the system under test. Perhaps an expected result does not match, or the system crashes, or the behaviour of the UI (a changed order of fields or a new field, for example) differs from what the script expects. What does the tool do? It attempts to carry on and a torrent of script failures or crashes ensues.

Yes, you can and should have unplanned event handlers in your script to trap test failures. Loss of synchronisation, mismatches between system state and test data, changes in the order of fields, new fields or removed fields are things the tester can recognise and act on without testing grinding to a halt. Tools can’t do this without a lot of programming effort on your part.
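To make the idea of an unplanned event handler concrete, here is a minimal Python sketch of a step runner that waits for the expected state and reports a loss of synchronisation rather than ploughing on. The names `SyncLost`, `wait_until` and `run_step`, and the `screen` dictionary standing in for a real UI, are all hypothetical and not taken from any particular tool.

```python
import time


class SyncLost(Exception):
    """Raised when the system under test never reaches the expected state."""


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it is truthy or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise SyncLost(f"condition not met within {timeout} s")
        time.sleep(interval)


def run_step(name, action, expected_state):
    """Run one scripted step; trap loss of synchronisation instead of carrying on."""
    action()
    try:
        wait_until(expected_state, timeout=0.2, interval=0.05)
        return (name, "ok")
    except SyncLost as exc:
        return (name, f"sync lost: {exc}")


# Simulated screen state (an assumption made for this illustration only).
screen = {"title": "Login"}

result_ok = run_step("open login", lambda: None, lambda: screen["title"] == "Login")
result_bad = run_step("open orders", lambda: None, lambda: screen["title"] == "Orders")
print(result_ok)
print(result_bad)
```

Even this small handler only reports the problem. Deciding what to do next (retry, skip, investigate) still has to be programmed case by case, which is exactly the effort a human tester does not need.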

There are disadvantages in using humans to follow scripted tests, too. Testers can become obsessed with obeying the script and forget to use their observational skills. They might overlook blatant anomalies because they concentrate on following the script. This less-than-optimal approach is exactly what we get from test execution automation.

Blind adherence to test scripts is a bad idea for human testers, but it’s the best we can do with test automation.

In this comparison of testers following scripts to tools running tests, we haven’t considered what exploratory testers do. The exploratory approach gives testers the freedom to investigate and test wherever and however they want.

Clearly, tools cannot simulate this activity. More importantly, tools cannot scope, prioritise, and model a system’s functionality and risks and design tests accordingly.

Test analysis and design activities are required regardless of whether a test is run by a human or tool.

There are further restrictions on what test execution tools can do:

- Test execution tools do exactly what you program them to do – no more, no less

- Tools don’t usually perform environment and application builds and configuration, or test data loading

- Tools don’t do test case or script design, test data and expected results preparation

- Tools can’t make thoughtful decisions when unexpected events occur.

To optimize your test execution processes, integrating them with robust test management software can offer streamlined workflows and enhanced reporting.

Graphical User Interface (GUI) Test Automation

It has been twenty-five or so years since GUI test automation tools entered the market, but the number of failed GUI test tool implementations is still high. This is mostly because expectations for automation are set too high – and tools used without discipline will never meet them.

GUI test automation tools have a reputation for being easy to use as products, but hard to manage when the applications under test (and therefore test scripts) need to change. These tools are very sensitive to changes in the user interface. Changes in location, order, size, and the addition or removal of screen objects can all affect scripts. More technical changes, such as the renaming of screen objects or the amendment of ‘invisible’ embedded GUI libraries, cause problems too.

GUI test automation works best when there is discipline regarding:

- The development process. Changes and releases are carefully managed; for example, changes are impact-analysed and notified to testers.

- The test script development approach. Experienced test automation engineers adopt systematic naming conventions, directory structures, modular, reusable components, defensive programming techniques and so on.
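One common way to get modular, reusable components is to keep all knowledge of a screen in a single class, so that a renamed field means one change rather than many. The sketch below assumes a hypothetical `FakeDriver` in place of a real GUI automation tool, and the `LoginPage` class and element names are invented for illustration.

```python
class FakeDriver:
    """Stand-in for a real GUI driver: maps logical element names to values."""

    def __init__(self, elements):
        self.elements = dict(elements)

    def find(self, element_id):
        # Defensive check: fail with a clear message if the screen has changed.
        if element_id not in self.elements:
            raise LookupError(f"element not found: {element_id}")
        return element_id

    def type_into(self, element_id, text):
        self.elements[self.find(element_id)] = text

    def read(self, element_id):
        return self.elements[self.find(element_id)]


class LoginPage:
    """Reusable component: every script that logs in goes through this class."""

    # Element names live in one place, so a UI rename is a one-line change.
    USERNAME = "login.username"
    PASSWORD = "login.password"
    MESSAGE = "login.message"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)

    def message(self):
        return self.driver.read(self.MESSAGE)


driver = FakeDriver({"login.username": "", "login.password": "", "login.message": "Welcome"})
page = LoginPage(driver)
page.log_in("alice", "secret")
print(page.message())
```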

Test automation scripting is a task that requires more than basic programming skills and, above all, experience of automation and the tool used. Where tests are automated at scale, there is also a need for design skills.

No matter how tools are described by vendors – “scriptless”, “usable by non-programmers” or “users can automate too” – you still need a programmer’s mentality, design skills, and a systematic approach.

API And Service Test Automation

Where there are components or functionality deployed as services, called by thin or mobile clients, testing will be performed using an API or via service calls. This mode of testing is performed using either custom code written by developers or using dedicated API or service testing tools. At any rate, the usual approach is to automate testing one way or another.

Because the API is ‘closer’ to the code to be tested, tests can be much more focused on the functionality to be tested. Certainly, the complexities of navigation through the UI and testing through the UI itself can be bypassed. For this reason, the general rule is:

If the functionality under test (at a code or component level) can be tested by a function call, an API, or service call, then automation will be easier – and is recommended.

Testing through the API has definite advantages.

- Although you’ll have to use or write code, the tests themselves will be much easier to apply (there is no UI to worry about).

- Once an API call is scripted, it’s just a case of tabulating the range of test cases you want to apply. There is no limit to the number of tests you can apply.

- API-based tests usually run much faster, making this style of testing well-suited to continuous delivery approaches where the deployment pipeline is highly automated and needs to be fast to respond.
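The "script the call once, then tabulate the cases" idea can be sketched in a few lines of Python. The function `quote_shipping` and its pricing rules are hypothetical stand-ins for whatever API or service call is under test; the table of cases is the part that grows.

```python
def quote_shipping(weight_kg, express=False):
    """Hypothetical function under test: returns a shipping price in pence."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    price = 500 if weight_kg <= 2 else 500 + int((weight_kg - 2) * 100)
    return price * 2 if express else price


# One row per test case: (weight_kg, express, expected result or exception type).
CASES = [
    (1, False, 500),
    (2, False, 500),
    (3, False, 600),
    (3, True, 1200),
    (0, False, ValueError),
]


def run_cases(cases):
    """Apply every row of the table to the call and collect mismatches."""
    failures = []
    for weight, express, expected in cases:
        try:
            actual = quote_shipping(weight, express)
        except Exception as exc:
            actual = type(exc)
        if actual != expected:
            failures.append((weight, express, expected, actual))
    return failures


failures = run_cases(CASES)
print(failures)
```

Adding a test is now a matter of adding a row, which is why the volume of API-level tests can be so much higher than the volume of UI tests.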

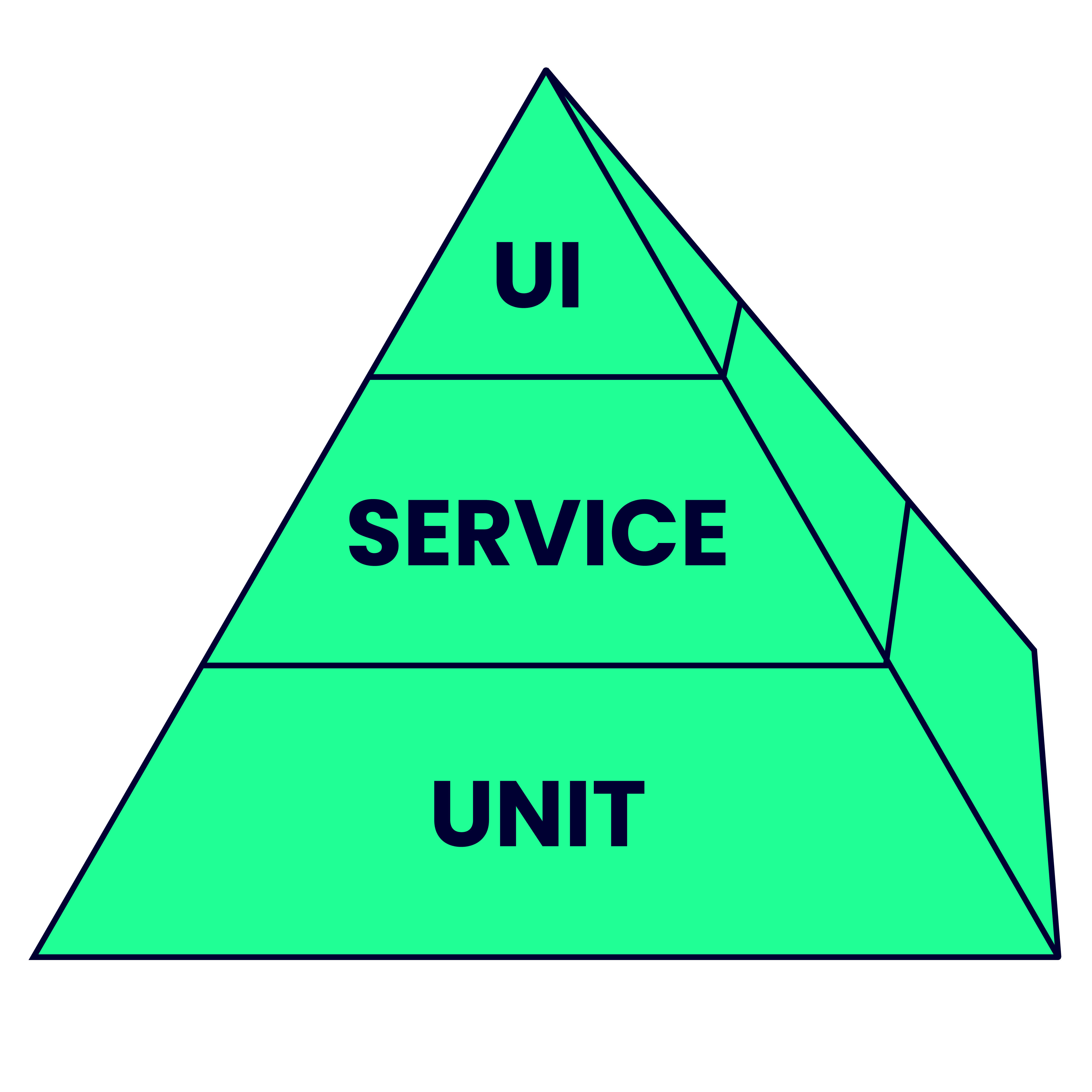

The ‘test automation pyramid’ (a Google search will show hundreds of examples of the graphic) has been adopted almost universally to present the recommendation that test automation efforts should focus more on developer or API testing than on testing through the GUI.

- Use UI tests where the user workflow must be exercised, but in low volume because tests are expensive and can be slow to run.

- Use API or service calls where the functionality under test can be isolated and where speed and ease of automation are essential.

Regression Testing

The classic use of automated tools is in the execution of regression tests. Having been run successfully at least once, these tests are trusted to be accurate representations of expected behaviour. When either the application code under test, associated reusable libraries, or the test environment changes, these tests provide some confidence that the required functionality has not been adversely affected by the change.

You can imagine the automated tests as being like a mould into which the system under test fits. Of course, the tests should ‘pass’ all of the checks that were previously run. By re-running them, you are trying to demonstrate functional equivalence of the new software version compared to the previous one. There are some straightforward principles you’ll need to follow:

- Firstly, the tests and checks performed need to be selected to give you confidence that unwanted changes in behaviour are not present. These tests act like a ‘trip wire’ to detect differences in behaviour.

- When bugs are fixed, or other software changes are made, there may be instances of behaviours that are altered (correctly) but, when encountered by your tests, will fail a check. Either you must change your tests in advance or let them fail and correct them afterwards. Sometimes, it’s faster to drop failing tests entirely and create new tests from scratch to replace them. At any rate, these altered tests must then be run to completion and pass.

- If the system under test is not stable, having many bugs or undergoing extensive changes between releases, it may not be viable to maintain a set of automated tests economically. Even if the system functionality doesn’t change, the user interface may still be evolving, and an unstable UI also makes automation harder to maintain. The cost of test failure investigation and test maintenance may outweigh their usefulness. It might be wise to test the less stable parts of the system manually until they stabilise.
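The ‘mould’ and ‘trip wire’ ideas can be illustrated with a small baseline comparison in Python. This is only a sketch under stated assumptions: `price_v1` and `price_v2` are hypothetical versions of the same function, and `capture` and `compare` are invented helper names, not part of any tool.

```python
import json


def capture(system, inputs):
    """Record the system's output for each input as the trusted baseline."""
    return {json.dumps(args): system(*args) for args in inputs}


def compare(baseline, system, inputs):
    """Re-run the same inputs and list every difference from the baseline."""
    diffs = []
    for args in inputs:
        expected = baseline.get(json.dumps(args))
        actual = system(*args)
        if expected != actual:
            diffs.append({"input": args, "expected": expected, "actual": actual})
    return diffs


def price_v1(quantity, unit_price):
    """Previous release (hypothetical)."""
    return quantity * unit_price


def price_v2(quantity, unit_price):
    """New release (hypothetical): a deliberate 10% discount for 10 or more items."""
    total = quantity * unit_price
    return total * 90 // 100 if quantity >= 10 else total


INPUTS = [[1, 250], [9, 250], [10, 250]]
baseline = capture(price_v1, INPUTS)
diffs = compare(baseline, price_v2, INPUTS)
print(diffs)
```

The single difference reported here is an intended change, so the right response is to update the baseline for that case, not to raise a bug. The tool cannot tell the two situations apart; someone has to investigate each tripped wire.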

Testing through the user interface can be cumbersome, expensive and sometimes slow to execute. Before you embark on GUI test automation, or even automate a single script, it is prudent to consider whether testing the key functionality would be easier, faster and more economical using an API.


Test Automation Frameworks

Testing frameworks are an essential part of any successful automated testing process. Think of them as a set of guidelines to use for creating and designing test cases. Using them can increase your testing team’s speed and efficiency, improve test accuracy, lower risks, and reduce test maintenance costs. We’ll now take a look at two of these.

While discussing test automation frameworks, it's essential to consider the role of QA automation tools in shaping an effective testing strategy.

Unit Test Frameworks

Unit test frameworks have existed for some years and are extensively used by programmers to test their code. Developer tests tend to be quite localised – after all, they usually test a single component with interfaces to other components or databases mocked or stubbed out. So, although unit tests need set-up and tear down steps before and after tests run, these tasks tend to be limited to running tests of a single component. Separate suites of tests would exist for every component.
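As a minimal illustration, the following Python sketch uses the standard library's `unittest` and `unittest.mock` to test a single component with its database-facing dependency mocked out. `OrderService` and its `lines_for` repository method are hypothetical names chosen for the example.

```python
import unittest
from unittest import mock


class OrderService:
    """Hypothetical component under test; it depends on a repository object."""

    def __init__(self, repository):
        self.repository = repository

    def total(self, order_id):
        lines = self.repository.lines_for(order_id)
        return sum(quantity * price for quantity, price in lines)


class OrderServiceTest(unittest.TestCase):
    def setUp(self):
        # Set-up: replace the real repository (and its database) with a mock.
        self.repository = mock.Mock()
        self.service = OrderService(self.repository)

    def tearDown(self):
        # Tear-down: nothing outside this component needs cleaning up.
        self.repository.reset_mock()

    def test_total_sums_all_lines(self):
        self.repository.lines_for.return_value = [(2, 300), (1, 150)]
        self.assertEqual(self.service.total(42), 750)
        self.repository.lines_for.assert_called_once_with(42)

    def test_total_of_empty_order_is_zero(self):
        self.repository.lines_for.return_value = []
        self.assertEqual(self.service.total(7), 0)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderServiceTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Note how small the set-up and tear-down are: because the dependency is mocked, there is no environment to build and no data to load.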

Unit tests created by developers are usually version-controlled and managed in parallel with component code. Typically, Continuous Integration facilities take the source code and all unit tests and run them after every commit of new code and/or tests. All developers see the outcome of these tests, so a test failure in CI becomes very visible. Fixing failing tests and code is a high priority in teams that use CI in this way so that the latest version of the system passes all CI tests all the time.

Integration and System Test Automation Frameworks

In recent years, GUI test tools have been integrated with CI tools using virtualised test devices, environments, and execution through command-line interfaces. Many organisations have been able to completely integrate the execution of unit, API-level and GUI tests through CI services.

However, many test teams still operate their own test environments separate from development or CI services. These teams tend to build their own test automation frameworks because CI tools are too developer-oriented or proprietary tools are inadequate.

GUI tests tend to implement end-to-end tests or user journeys using different applications, hardware devices, and environments. These tests need to seamlessly set up integrated test environments and prepared test data on differing platforms, and this requires privileged, complex procedures and varied technical environments to operate. The vast majority of teams who use GUI test automation build their own unique, but effective, automation frameworks.

GUI automation frameworks vary in sophistication from unit-test-like tools to complex and comprehensive facilities that manage environments, application builds, huge test data loads, messaging and synchronisation across platforms and environments, and notifications to team members.

Some proprietary tools come with utilities or harnesses to manage, execute and report from suites of automated tests. These are of varying value, so many organisations write their own test automation frameworks to extend their functionality. In recent years, the scope of automation frameworks has grown, and there is no single or simple definition anymore, so we’ll now look at what test automation frameworks can do for software teams.

A test automation framework extends the functionality of test execution engines.

Test Suite Configuration

The framework integrates automated tests into meaningful collections, or clusters, of tests. These collections are configurable in that tests can be run in a hierarchy of sequences, groups, or arbitrary selections. These tools might include features to data-drive tests using prepared tables of test data. This is the simplest kind of framework — popular tools usually offer some form of test suite configuration.
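A rough Python sketch of this simplest kind of framework, using only the standard `unittest` module: tests are grouped into named collections and one of them is driven from a table of data. The `check_login` function, the `COLLECTIONS` mapping and the `build_suite` helper are hypothetical names for illustration.

```python
import unittest


def check_login(user, password):
    """Hypothetical function under test."""
    return user == "alice" and password == "secret"


# Prepared table of test data: (user, password, expected outcome).
LOGIN_DATA = [
    ("alice", "secret", True),
    ("alice", "wrong", False),
    ("", "secret", False),
]


class LoginTests(unittest.TestCase):
    def test_login_table(self):
        # Data-driven: one check per row, each reported separately on failure.
        for user, password, expected in LOGIN_DATA:
            with self.subTest(user=user, password=password):
                self.assertEqual(check_login(user, password), expected)


class SmokeTests(unittest.TestCase):
    def test_valid_login_works(self):
        self.assertTrue(check_login("alice", "secret"))


# Named collections that can be selected at run time.
COLLECTIONS = {
    "smoke": [SmokeTests],
    "full": [SmokeTests, LoginTests],
}


def build_suite(name):
    """Assemble the test cases belonging to one named collection."""
    loader = unittest.defaultTestLoader
    return unittest.TestSuite(
        loader.loadTestsFromTestCase(case) for case in COLLECTIONS[name]
    )


smoke = build_suite("smoke")
full = build_suite("full")
```

A suite built this way can then be handed to a runner, for example `unittest.TextTestRunner().run(full)`.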

Set-up and Tear-Down

The framework handles all set-up and tear-down activities for a single test, collection or entire suite. Set up might entail creating test environments from scratch, full configurations, and pre-loading test databases and other data sources from scratch. Tear-down might involve the clean-up of test data or resetting or deletion of partial or complete environments. The framework might integrate with, and be under the control of, pipeline orchestration tools.
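On a very small scale, set-up and tear-down can be sketched as a Python context manager that creates a throwaway database, pre-loads it, and always cleans up afterwards. An in-memory SQLite database is used here purely as an assumption to keep the example self-contained; the `seeded_database` helper and the `customers` table are hypothetical.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def seeded_database(seed_rows):
    """Set up a fresh database with seed data; tear it down whatever happens."""
    connection = sqlite3.connect(":memory:")
    try:
        connection.execute(
            "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)"
        )
        connection.executemany(
            "INSERT INTO customers (id, name) VALUES (?, ?)", seed_rows
        )
        connection.commit()
        yield connection
    finally:
        # Tear-down runs even if the test body raised an exception.
        connection.close()


def count_customers(connection):
    return connection.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


with seeded_database([(1, "Ada"), (2, "Grace")]) as db:
    customers_seen = count_customers(db)

print(customers_seen)
```

Real frameworks do the same thing at a much larger scale, building whole environments rather than a single table, but the guarantee that tear-down runs after a failure is the essential feature.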

Exception Handling

A failure in any test – whether in the system under test or a loss of synchronisation – can be handled consistently by reporting the event and usually allowing the remaining tests in that collection to continue to run. The framework can be programmed to handle test check failures, loss of synchronisation, execution time-outs and other selected test outcomes – each with custom procedures.
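The following Python sketch shows one way such handling might look: each outcome type has its own handler, and a failure in one test does not stop the rest of the collection. The `run_collection` function, the `HANDLERS` mapping and the four example tests are hypothetical.

```python
def run_collection(tests, handlers):
    """Run every test; route each failure to a handler and keep going."""
    results = {}
    for name, test in tests:
        try:
            test()
            results[name] = "passed"
        except Exception as exc:
            for exc_type, handler in handlers.items():
                if isinstance(exc, exc_type):
                    results[name] = handler(exc)
                    break
            else:
                # No custom procedure registered for this kind of event.
                results[name] = f"error: {type(exc).__name__}"
    return results


def passing():
    pass


def failing_check():
    raise AssertionError("unexpected message")


def timing_out():
    raise TimeoutError("no response after 30 s")


def crashing():
    raise KeyError("missing field")


# Custom procedures per outcome type (here they only format a report line).
HANDLERS = {
    AssertionError: lambda exc: f"failed: {exc}",
    TimeoutError: lambda exc: f"timed out: {exc}",
}

results = run_collection(
    [
        ("passing", passing),
        ("failing check", failing_check),
        ("timing out", timing_out),
        ("crashing", crashing),
    ],
    HANDLERS,
)
print(results)
```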

Logging and Messaging

The framework logs test execution and execution status consistently across all test collections. The framework either triggers reports from the tools that execute tests or provides a consistent reporting journal of all test setup, execution and tear-down activity. The framework might interact with ChatOps bots to inform the team of exceptions and allow team members to pause, stop, repeat or restart test suites.

Test Abstraction with Domain-Specific Language

Two distinct types of frameworks that abstract test execution code to models, or human-readable or non-technical text, have emerged in the market:

- Keyword-driven frameworks allow tests to be defined using keywords. Calls to the execution engine features are implemented as English-like commands with placeholders for parameters or data. User-defined, reusable modules can be defined and called using plain text commands in the same way. Frameworks exist for all manner of interfaces including GUI, service, API, command-line interpreters and so on. Scripts can exercise functionality across different operating and device platforms.

- Behaviour-Driven Development (BDD) frameworks allow stories and scenarios (examples) that describe feature behaviour to be captured using a domain-specific language (DSL). The most popular language is the so-called Gherkin, which uses the “given … when … then …” language structure to capture examples. Given/when/then effectively represent pre-conditions, steps, and post-conditions of test cases. BDD tools convert the given/when/then text to ‘step calls’ in a programming language. The developer (or tester) must implement the ‘fixtures’ or code to make calls to a test execution engine. In this way, the language of a requirement (the story) can be directly connected to the test execution code.
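To show how plain text can be connected to ‘step calls’, here is a deliberately tiny Python sketch of the mechanism. It is not the API of any real BDD tool: the `step` decorator, the `STEPS` registry, `run_scenario` and the bank account example are all hypothetical, and real Gherkin supports far more than this.

```python
import re

STEPS = []  # registry of (compiled pattern, step function) pairs


def step(pattern):
    """Register a function as the implementation of a line of scenario text."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"an account with balance (\d+)")
def given_balance(context, amount):
    context["balance"] = int(amount)


@step(r"I withdraw (\d+)")
def when_withdraw(context, amount):
    context["balance"] -= int(amount)


@step(r"the balance is (\d+)")
def then_balance(context, amount):
    if context["balance"] != int(amount):
        raise AssertionError(f"expected {amount}, got {context['balance']}")


def run_scenario(text):
    """Match each Given/When/Then line to a registered step and call it."""
    context = {}
    for line in text.strip().splitlines():
        keyword, _, rest = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And"}:
            raise ValueError(f"unknown keyword: {keyword!r}")
        for pattern, func in STEPS:
            match = pattern.fullmatch(rest)
            if match:
                func(context, *match.groups())
                break
        else:
            raise LookupError(f"no step matches: {rest!r}")
    return context


SCENARIO = """
Given an account with balance 100
When I withdraw 30
Then the balance is 70
"""

final_context = run_scenario(SCENARIO)
print(final_context)
```

In a real project the step functions would call the test execution engine (a GUI driver or an API client) rather than update a dictionary. A keyword-driven framework works on much the same principle, with keywords in place of given/when/then sentences.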

Model-based frameworks

In this case, tools allow a model of the system under test to be created. This might be automatically derived from a webpage where the tool scans the HTML, detects forms and fields, and builds a model from which paths through the forms can be either generated automatically or selected by the tester. Object maps for mobile apps or other smart devices can also be built manually. Using the paths through the object map, calls to the test execution engine features are made in a similar way to the BDD and keyword-driven tools. In principle, tests can be built graphically without code. Tools in this space are relatively new and improving rapidly. Expect their use to accelerate in the future.
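The core of the idea, generating paths from a model, can be sketched in Python with a simple graph of screens and the transitions between them. The `MODEL` below is a hand-written, hypothetical example; a real tool would derive something similar by scanning the application.

```python
# Hypothetical model: each screen maps to the screens reachable from it.
MODEL = {
    "login": ["home"],
    "home": ["search", "basket"],
    "search": ["basket"],
    "basket": ["checkout"],
    "checkout": [],
}


def paths(model, start, end, visited=()):
    """Yield every loop-free path through the model from `start` to `end`."""
    visited = visited + (start,)
    if start == end:
        yield list(visited)
        return
    for next_screen in model[start]:
        if next_screen not in visited:
            yield from paths(model, next_screen, end, visited)


all_paths = list(paths(MODEL, "login", "checkout"))
for path in all_paths:
    print(" -> ".join(path))
```

Each generated path is a candidate test: the framework would translate every step in it into calls to the test execution engine, or let the tester pick which paths are worth running.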
